What is a Large Language Model?

A Large Language Model (LLM) is an advanced form of generative AI designed to understand and generate human language. It is trained on vast amounts of text so that it can respond to queries, write content, and perform other language-related tasks with remarkable proficiency.

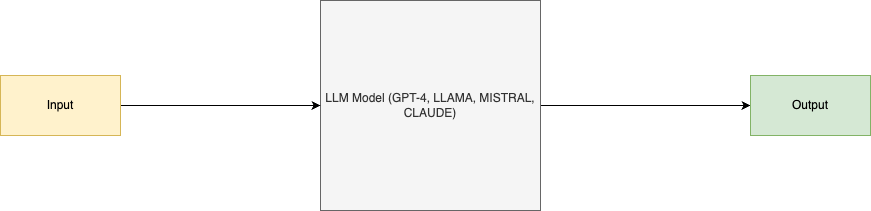

I’ll explain an LLM using a straightforward example: picture it as a mysterious black box. You feed it some input, perhaps a snippet of text, and it returns an output.

Okay. But I can’t relate this to ChatGPT or Gemini. Can you explain how ChatGPT fits into this picture?

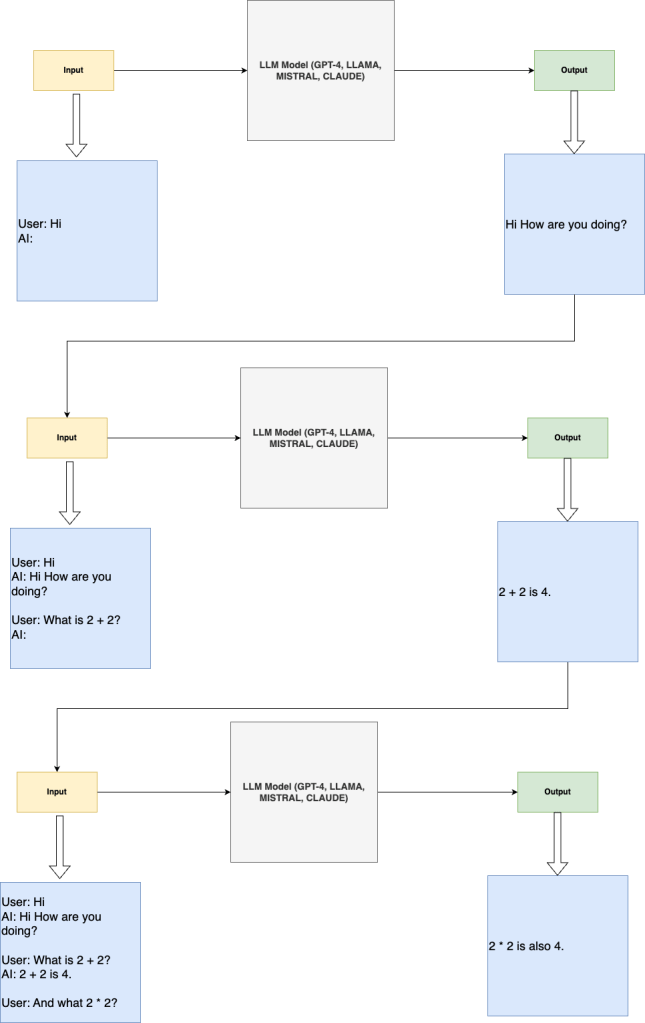

ChatGPT and Gemini (previously known as Bard) are applications built on top of Large Language Models (LLMs). Each message you send is appended to the input that the LLM processes. In essence, the LLM treats the whole conversation as a single input string, and that string grows with every exchange. The image above illustrates this.
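To make the "single input string" idea concrete, here is a minimal sketch in Python. The `build_input` helper and the `role: text` layout are assumptions made for illustration; real chat applications use their own internal formats.

```python
def build_input(history):
    """Join every message so far into the one string the model would receive."""
    return "\n".join(f"{role}: {text}" for role, text in history)


# Hypothetical conversation: each new message is appended to the history.
history = []
history.append(("user", "Hi, who are you?"))
history.append(("assistant", "I am a helpful assistant."))
history.append(("user", "What is an LLM?"))

# The model does not see three separate messages, just this one growing string.
print(build_input(history))
```

Every new message makes the string longer, which is why the input cannot keep growing indefinitely.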

Alright! Now I get it. So does that mean the input keeps growing and the AI will remember my chat history forever?

Every Large Language Model (LLM), including the ones used in applications like ChatGPT, has a maximum amount of input it can process, known as its context window and measured in tokens (which can be words or parts of words). For instance, the GPT-4-turbo model, which powers ChatGPT, can handle up to 128,000 tokens. Once a conversation grows past that limit, the application, in this case ChatGPT, begins to trim earlier messages from the input string to make room for new ones.
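The trimming step can be sketched roughly as follows. This is only an illustration: `count_tokens` is a crude stand-in that counts whitespace-separated words (real tokenizers split text differently), and `MAX_TOKENS` is a tiny made-up limit so the effect is visible.

```python
from collections import deque

MAX_TOKENS = 12  # hypothetical tiny limit; real models allow far more


def count_tokens(text):
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(messages, max_tokens=MAX_TOKENS):
    """Drop the oldest messages until the remaining ones fit within max_tokens."""
    kept = deque(messages)
    while kept and sum(count_tokens(m) for m in kept) > max_tokens:
        kept.popleft()  # the oldest message is forgotten first
    return list(kept)


messages = [
    "Hello there, my name is Sam",   # 6 words
    "Nice to meet you, Sam",         # 5 words
    "Can you explain tokens to me",  # 6 words
]

# 17 words exceed the limit of 12, so the first message is dropped.
print(trim_history(messages))
```

With these sample messages the first one is removed and the last two are kept, since together they fit within the assumed limit.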

Got it! So how do you control the behaviour of the AI in an application if it keeps forgetting messages?

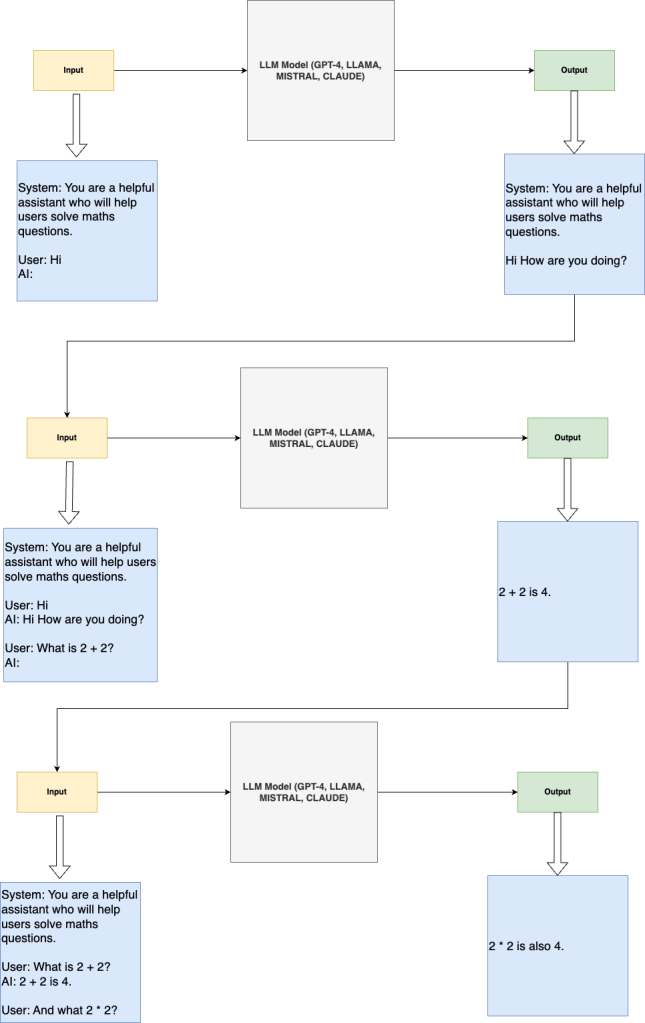

When developing an application that utilizes a Large Language Model (LLM), it’s common practice to maintain a constant instruction or system prompt within the input. This means that while the chat history may be shortened to accommodate new inputs, the initial instruction prompt remains unaltered and always present.

For example, when the user sends a third message, the application automatically deletes the oldest message to make space, yet the system prompt remains unchanged. This ensures the AI keeps behaving as intended. The end application decides how much chat history to keep, based on the maximum input length of the LLM.
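A minimal sketch of this behaviour is shown below. The `build_prompt` function, the `max_messages` limit, and the `role: text` layout are hypothetical and chosen to keep the example short; a real application would usually budget by tokens rather than by message count.

```python
def build_prompt(system_prompt, history, max_messages=2):
    """Always keep the system prompt; keep only the most recent chat messages."""
    recent = history[-max_messages:] if max_messages > 0 else []
    lines = [f"system: {system_prompt}"]
    lines += [f"{role}: {text}" for role, text in recent]
    return "\n".join(lines)


system_prompt = "You are a polite assistant."  # hypothetical instruction
history = [
    ("user", "first message"),
    ("user", "second message"),
    ("user", "third message"),
]

# With room for two messages, the first one is dropped,
# but the system prompt still leads the input.
print(build_prompt(system_prompt, history))
```

However long the conversation gets, the first line of the input is always the system prompt, so the instruction is never trimmed away.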